This post about TypeScript Generics sat in my drafts for a while (not sure why), and it is finally out. 🤞 But before we get into Generics, we should be aware of what makes types in TypeScript exciting.

TypeScript lets us type check code so that errors are caught at compile time instead of run time. For example, the following JavaScript code raises no complaint until it runs, at which point it throws an error in a browser or Node.js environment.

const life = 42;

life = 24; // Not flagged ahead of time; throws a TypeError at run time

In this case, TypeScript reports the illegal reassignment of the constant life in the editor/terminal before the code ever runs. It also infers the type of life from its value 42. Additionally, you can specify the type explicitly if needed:

const life: number = 42;

life = 24; // Compile-time error: cannot assign to 'life' because it is a constant
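Type inference and explicit annotations are easier to tell apart with let variables, where reassignment is allowed and only the type is checked. The following is a minimal sketch; the names answer and label are illustrative and not part of the original example.

```typescript
// Inferred type: the initializer 42 makes `answer` a number.
let answer = 42;
answer = 24; // fine, still a number

// Explicit annotation: the type is spelled out next to the name.
let label: string = "life";

// Uncommenting either line below should be rejected at compile time:
// answer = "forty-two"; // a string is not assignable to a number
// label = 42;           // a number is not assignable to a string
```

Both variables are checked the same way; the annotation only makes the intent visible to the reader.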

Named Types

TypeScript ships with a handful of basic types such as number, string, boolean, object, array, any, and void (apart from undefined, null, never, and, more recently, unknown). However, these are not enough on their own, especially in a large project where we combine them and need an umbrella or custom type that holds them together and can be reused. Named types fill that role: classes, interfaces, enums, and type aliases all let us create custom types.

For example, we can create a custom type, MyType, comprising a few basic types as follows.

interface MyType {

foo: string;

bar: number;

baz: boolean;

}

But what if we want foo to be a string, an object, or an array? One way is to copy the existing interface to MyType2 (and so on).

interface MyType2 {

foo: Array<string>;

bar: number;

baz: boolean;

}

Just as a function parameter lets us pass any value into a function and so makes the function reusable, what if we could pass types into MyType in the same way? With this approach, we would not need to duplicate code to handle the same shape of data with different types. But before we dive in, let us first understand the problem more clearly. To do so, we can write a cache function that caches some string values.

function cache(key: string, value: string): string {

(<any>window).cacheList = (<any>window).cacheList || {};

(<any>window).cacheList[key] = value;

return value;

}
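A quick usage sketch shows where the string-only signature starts to pinch. To keep it runnable outside a browser, this version assumes a module-level cacheList object in place of window.cacheList; that substitution is mine, not part of the original function.

```typescript
// Assumption: a module-level store stands in for window.cacheList.
const cacheList: { [key: string]: string } = {};

function cache(key: string, value: string): string {
  cacheList[key] = value;
  return value;
}

const greeting = cache("greeting", "hello"); // OK: the value is a string

// Uncommenting the next line should fail to compile,
// because a number is not assignable to the string parameter:
// cache("answer", 42);
```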

Because of strict type checking, we are forcing the parameter value to be of type string. But what if someone wants to use a numeric value? I’ll give you a hint: which operator in JavaScript do we use to supply a fallback when a variable’s value is falsy? Correct! We use ||, a.k.a. the logical OR operator. Now imagine for a second that you are the creator of the TypeScript language (sorry, Anders Hejlsberg) and want to resolve this issue for all developers. You might go for a similar solution, a fallback type, and after countless hours of brainstorming end up borrowing the symbol of the bitwise OR operator, i.e. |, for it (FYI, that thing is called a union type in alternate dimensions where Anders Hejlsberg is still the creator of TypeScript).

function cache(key: string, value: string | number): string | number {

(<any>window).cacheList = (<any>window).cacheList || {};

(<any>window).cacheList[key] = value;

return value;

}

Isn’t that amazing? Wait, but what if someone wants to cache boolean values, or arrays/objects of custom types? Since the list is never-ending, our current solution does not scale at all. Wouldn’t it be great to control these types from outside? I mean, how about we define placeholder types inside the above implementation and provide the real types from the call site instead?
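The union version also has a cost at the call site that is easy to miss: the return type is the whole union, so the caller has to narrow it before using anything specific to one member. A minimal sketch, again assuming a module-level cacheList in place of window.cacheList:

```typescript
// Assumption: a module-level store stands in for window.cacheList.
const cacheList: { [key: string]: string | number } = {};

function cache(key: string, value: string | number): string | number {
  cacheList[key] = value;
  return value;
}

const cached = cache("answer", 42); // typed as string | number, not number

// Uncommenting the next line should fail to compile,
// because toFixed is not available on the string member of the union:
// cached.toFixed(2);

// The caller has to narrow the union before using number-only methods.
const formatted = typeof cached === "number" ? cached.toFixed(2) : cached;
```

We passed in a number, yet the compiler has forgotten that by the time the value comes back.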

Generic Function

Let us use ValueType (or any other placeholder name, such as a plain T, to suit your needs) as a placeholder wherever needed.

function cache<ValueType>(key: string, value: ValueType): ValueType {

(<any>window).cacheList = (<any>window).cacheList || {};

(<any>window).cacheList[key] = value;

return value;

}

We can even pass the custom type MyType as the type argument to the cache function in order to type check the value for correctness (try changing bar’s value to be non-numeric and see for yourself).

cache<MyType>("bar", { foo: "foo", bar: 42, baz: true });

This mechanism of parameterizing types is called Generics. This, in fact, is a generic function.
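In many calls the type argument does not need to be written out, because the compiler can infer it from the value. The sketch below assumes a module-level cacheList typed as unknown values in place of window.cacheList; the variable names are illustrative.

```typescript
// Assumption: a module-level store stands in for window.cacheList.
const cacheList: { [key: string]: unknown } = {};

function cache<ValueType>(key: string, value: ValueType): ValueType {
  cacheList[key] = value;
  return value;
}

const count = cache("count", 42);         // ValueType inferred as number
const title = cache("title", "Generics"); // ValueType inferred as string
const flags = cache<boolean[]>("flags", [true, false]); // explicit type argument

// The return type matches the argument, so no narrowing is needed.
const rounded = count.toFixed(1);
const shouted = title.toUpperCase();
```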

Generic Classes

Similar to the generic function, we can also create generic classes using the same syntax. Here we have created a wrapper class CacheManager that holds the previously defined cache method and replaces the global (<any>window).cacheList variable with a private property cacheList.

class CacheManager<ValueType> {

private cacheList: { [key: string]: ValueType } = {};

cache(key: string, value: ValueType): ValueType {

this.cacheList[key] = value;

return value;

}

}

new CacheManager<MyType>().cache("bar", { foo: "bar", bar: 42, baz: true });

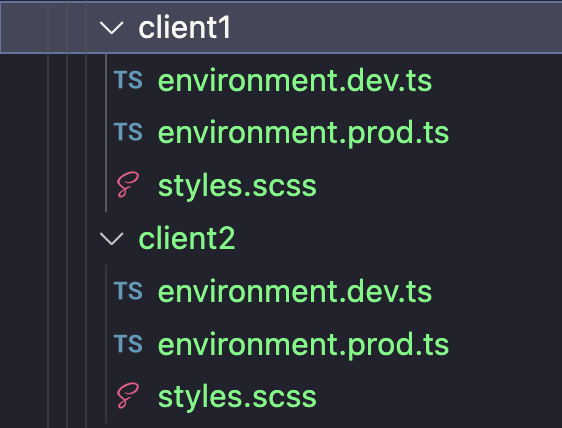

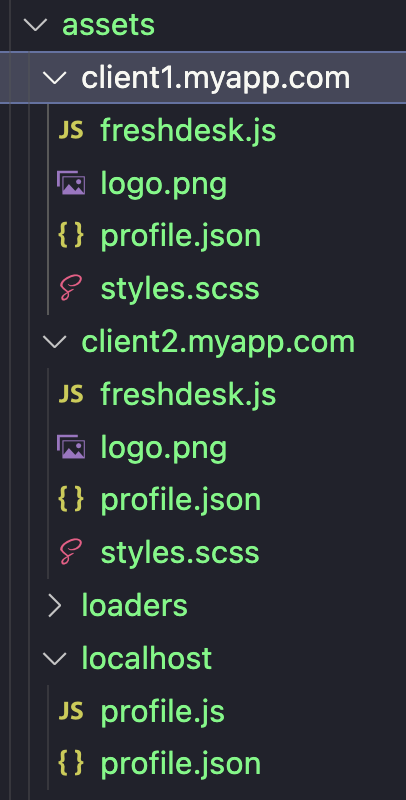
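The benefit of a class-level type parameter shows up once a second method uses it. The sketch below adds a hypothetical get method, which is not part of the original CacheManager, to show ValueType flowing from the write side to the read side.

```typescript
class CacheManager<ValueType> {
  private cacheList: { [key: string]: ValueType } = {};

  cache(key: string, value: ValueType): ValueType {
    this.cacheList[key] = value;
    return value;
  }

  // Hypothetical helper, not in the original class: read a value back.
  get(key: string): ValueType | undefined {
    return this.cacheList[key];
  }
}

const numbers = new CacheManager<number>();
numbers.cache("answer", 42);

const hit = numbers.get("answer");   // typed as number | undefined
const miss = numbers.get("missing"); // undefined at run time

// Uncommenting the next line should fail to compile,
// because this instance only accepts numbers:
// numbers.cache("answer", "42");
```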

The above code makes CacheManager reusable for caching all types of values, but someday there will be a need to vary the types of MyType’s properties as well. That brings us back to the original problem of MyType vs MyType2 (from the Named Types section above). To save us from duplicating MyType to accommodate varying property types, TypeScript allows type parameters on interfaces too, which makes them Generic Interfaces. We are not restricted to a single type parameter either; use as many as needed. Additionally, we can use union types as a fallback to the provided type arguments. This lets us pass the empty object type {} as a type argument wherever we want the default types of values.

interface MyType<FooType, BarType, BazType> {

foo: FooType | string;

bar: BarType | number;

baz: BazType | boolean;

}

new CacheManager<MyType<{},{},{}>>().cache("bar", { foo: "bar", bar: 42, baz: true });

new CacheManager<MyType<number, string, Array<number>>>().cache("bar", { foo: 42, bar: "bar", baz: [0, 1] })

I know that it looks a bit odd to pass {} wherever the default parameter types are used. However, this has been resolved in Typescript 2.3 by allowing us to provide default type arguments so that passing {} in the parameter types will be optional.
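With default type arguments, the interface might be sketched as follows. Note that this version drops the union fallbacks (FooType | string and so on), on the assumption that the defaults now do that job; the variable names are illustrative.

```typescript
interface MyType<FooType = string, BarType = number, BazType = boolean> {
  foo: FooType;
  bar: BarType;
  baz: BazType;
}

// No type arguments: every parameter falls back to its default.
const defaults: MyType = { foo: "bar", bar: 42, baz: true };

// Only the first argument is given; the other two use their defaults.
const fooOnly: MyType<number> = { foo: 42, bar: 7, baz: false };

// All three arguments are given explicitly.
const allGiven: MyType<number, string, Array<number>> = {
  foo: 42,
  bar: "bar",
  baz: [0, 1],
};
```

Defaults are filled in from left to right, so a type argument can only be omitted if every argument after it is omitted too.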

Generic Constraints

When we implicitly or explicitly give something a type, such as the key parameter of the cache method above, we purposefully constrain it; here key must be of type string. But we are inadvertently allowing any string at all. What if we want to constrain key to the names of MyType’s properties only? One naive way is to use String Literal Types for key, as below, and get the error [ts] Argument of type '"qux"' is not assignable to parameter of type '"foo" | "bar" | "baz"' whenever the key, such as qux, does not belong to MyType. This works correctly; however, because the string literal types are hard-coded, we cannot replace MyType with the YourType interface, since it has different properties, as follows.

interface YourType {

qux: boolean;

}

class CacheManager<ValueType> {

private cacheList: { [key: string]: ValueType } = {};

cache(key: "foo" | "bar" | "baz", value: ValueType): ValueType {

this.cacheList[key] = value;

return value;

}

}

new CacheManager<MyType<{},{},{}>>().cache("foo", { foo: "bar", bar: 42, baz: true }); // works

new CacheManager<MyType<{},{},{}>>().cache("qux", { foo: "bar", bar: 42, baz: true }); // compile error, as intended

new CacheManager<YourType>().cache("qux", { qux: true }); // compile error, but should have worked

To make it work, we would have to manually update the string literal types used for key in the class implementation each time. However, just as classes can extend other classes, TypeScript lets a type parameter extend another type. So we can do away with the string literal types "foo" | "bar" | "baz" for key by using a generic constraint, i.e. KeyType extends keyof ValueType, as follows. Here, we force the type checker to validate that key is one of the properties of the type provided to CacheManager. This way we can even serve the previously mentioned YourType without any error.

class CacheManager<ValueType> {

private cacheList: { [key: string]: ValueType } = {};

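// "& string" is an addition to the original constraint: cacheList is indexed by string, and newer compilers let keyof include number and symbol keys.

cache<KeyType extends keyof ValueType & string>(key: KeyType, value: ValueType): ValueType {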

this.cacheList[key] = value;

return value;

}

}

new CacheManager<MyType<{},{},{}>>().cache("foo", { foo: "bar", bar: 42, baz: true }); // works

new CacheManager<MyType<{},{},{}>>().cache("qux", { foo: "bar", bar: 42, baz: true }); // compile error, as intended

new CacheManager<YourType>().cache("qux", { qux: true }); // works
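The same keyof constraint is often paired with an indexed access type, so that the return type follows the key as well. The getProperty helper below is a common illustration of that pairing and is not part of CacheManager; the names are hypothetical.

```typescript
interface YourType {
  qux: boolean;
}

// keyof produces the union of property names; for YourType that is just "qux".
type YourKeys = keyof YourType;
const onlyKey: YourKeys = "qux";

// K must be a key of T, and T[K] is the type of that particular property.
function getProperty<T, K extends keyof T>(obj: T, key: K): T[K] {
  return obj[key];
}

const yours: YourType = { qux: true };
const quxValue = getProperty(yours, onlyKey); // typed as boolean

// Uncommenting the next line should fail to compile,
// because "foo" is not a key of YourType:
// getProperty(yours, "foo");
```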

Alright, that brings us to the climax of Generics. I hope \0/ Generocks \m/ for you.

If you found this article useful in any way, feel free to donate and receive my dilettante painting as a token of appreciation for your donation.