This is my 2nd science-fiction short story (the 1st one is here). It is a tribute to frontend engineers and to how their lives and jobs might change in the future due to the advent of ML/AI.

Special thanks to Aamna, Roshan, and Abhishek for grammatical/punctuation improvements and the overall constructive feedback.

Please star or comment to provide your valuable feedback, whatever it may be. I’m immune to anything hurled at me 😅.

IN A VACANT BUT CHAOTIC CABIN, a soul, deep in reverie, entered. The door plate had a caption that read, “Yesterday’s gadgets were in front of you, Today’s gadgets are in your hands, and Tomorrow’s gadgets will be right within you”. That was the most influential gift his grandpa had given him, at an age when the only use he knew for his tongue was to tease others. Now he was in his late twenties and had worked part-time as an A.I. specialist for many years. Dare not gaze in awe, since everybody was an A.I. specialist these days, mostly due to high demand and high wages compared to other jobs or, sarcastically, no jobs. He recalled the “There is an APP for that!” story his grandpa often shared to justify the current situation. Because of the rampant use of Machine Learning tools and techniques, making something smarter was a piece of cake now. Of course, you had to dig deeper to improve the existing Machine Learning models or invent your own for an unprecedented result.

Dave was an autodidact who inhabited his working space. The room was furnished in an aesthetically minimalistic design that gave the impression of everything being fairy. On one side of the room, the wall facing the door was painted pinkish blue, only to warp it nicely or rather gel with the other drab walls. A silvery photo frame, containing a baker’s dozen of meaningless photos, was planted on one of the drab walls in the shape of a Kalpataru tree. It was connected to the Line (called the Internet before), as the framed pictures changed randomly or perhaps according to the mood and emotions emanated by the people around. It turned out that even the walls changed colors and patterns by injunction.

There were no open vents, but still, it was quite frigid in there for anybody to retreat to the chaise longue, cemented far away in the corner to rest for eternity.

Another wall, facing east, had big nestled sliding windows for sunlight, and thereby warmth, to slip through. As usual, the Sun shone on the sliding windows from outside like stage lighting. Looking down with half-shut eyes through the glass confirmed that the cabin was part of a 100-story construction erected from well above the ground. It might have been lodged on top of some flat structure below, but that could not be seen from up above. Even the surroundings were occupied by similar but sufficiently spaced-out constructions.

Adjacent to the sliding windows, stacked circular knobs controlled the artificial light (sunlight, to be precise, for when the sun was not around) that emerged from the glass to fill the room, since no working hours were pre-defined.

Most things were wall-mounted; the only exception was Dave’s workstation. Long gone were the days when people proudly designated themselves as software engineers. In those days, their work and identity extended beyond mere programming or so-called coding: analyzing project requirements, brainstorming with a team, creating prototypes, beta testing with a handful of early adopters, fixing bugs, churning out new features, and many more such activities had made engineering a nail-biter of a job, in a good way. But things had changed drastically and with unexpected swiftness since then.

Now they were called experts.

When people stopped doing traditional searches on various search engines back then, the obvious progression for many of those search engines was to become Digital Personal Assistants. Many could not fathom the idea, and some died due to competition and, so to speak, the complexity of giving accurate answers. Today, the experts collaborated, or ironically fraternized, with an Intelligent Digital Personal Assistant (IDPA) for any software-related work, as if humans and machines had switched roles. Even the schism between frontend and backend had become illusory.

Nowadays these experts trusted IDPAs more than their own instincts when it came to problem-solving. By the same token, this had probably made the term software engineer obsolete in this era.

When Dave entered the room, IDPA welcomed him, “Good Morning, Dave!” and added in a soothing female voice, “I’m stoked to work alongside you on a new project today”, followed by a giggle. When Dave said nothing in return, deadpan, it auto-played his favorite song. Dave was mulling over a problem he had been carrying in his head since last night. Yeah, that was one of the things he was allowed to do until A.I. figured out how to do it on its own; given the exponential growth in technology, that would happen soon.

While the song reached its peak, Dave slingshotted himself off to the fridge, fetched as many flavors of staple meal as he could clasp, and hurriedly trotted back to his desk before he could unintentionally drop everything on the matted floor. In the meantime, his workstation lit up automatically when it sensed him near. IDPA had recognized Dave and booted his monitor instantly, or it just reappeared magically, as if someone had tossed it into the realm of 3D from the unknown 4D world, while he was busy tearing open one of the staple meals he had just lugged over.

After some time, when his mouth was full enough to chew, he shifted the bowl to one hand and threw the remaining meal bags to the other side of the desk. Then he buried himself in the ergonomic chair and, chewing vigorously, raised one hand with the palm facing the drab wall, like a rocket lifting off the surface, only to stop the music.

He turned his face squarely towards the computer screen affixed to the table. Then he stared at it long enough to make the surroundings blurry, almost nonexistent, as if he were floating on a cloud.

He was alone. Working From Home Forever.

AS USUAL, HE STUFFED the remaining meal into his mouth and set the bowl away; he literally slid it far across the desk, never to grab it again. Dave began his day by skimming over unread messages first, in no time, as he had already linked IDPA to a universal messaging gateway.

Back in the old days, when the poorest of the poor were given affordable internet, it became obvious that letting just one company accumulate such a huge amount of data would be hazardous. Thence the market leaders from various countries back then were the first to realize that something needed to be done to avoid a draconian future. So these early liberators decided to come to terms with it and resolved to create a universal platform to amalgamate the disintegrated tribes. Eventually, their efforts gave birth to the universal messaging gateway. It had been developed as an open project ever since, consisting mainly of a mixture of open APIs with a distributed file system at its helm. This meant that no one company would be held accountable for owning the public data, not even the government by any means.

With such an architecture at its core, even the smallest entrepreneur delivering weed at home could use the messaging gateway to notify customers about anything. The weed delivery Page in ARG, however, had to provide hooks for the universal messaging gateway to pull in real-time updates and notify those customers in a scalable manner. Later, a similar strategy was used with IDPA so that any kind of request could be made without even installing the Page. Just give a command to your IDPA and boom!

The Universal Messaging Gateway now happened to be the common but much more powerful interface to access all sorts of messages coming from family members, friends, colleagues, ITS, IoT home appliances, and all sorts of fairy things mounted around Dave’s room. And from strangers too, since spam detection was an unsolved problem. It would worsen though, some said.

IDPA automatically moved the few important incoming messages that needed Dave’s consideration to the top, while the overwhelming majority were grouped separately. Some experts preferred to check each message manually by disabling IDPA (its icon resembled a bot head placed atop a human abdomen) in order to vindicate their amour-propre, but for Dave it just saved time.

Predominantly, incoming messages would be resolved instantly by IDPA itself, the moment they arrived, but only if they were project-specific. Those replies were unerringly apt, as if Dave himself had been involved. Still, Dave could see such messages on the right side of the screen. He prodded a flat sheet of a keyboard, which was paired with ARG, to close the list of auto-replied messages and shift his focus to the important ones in the center. Then he went full screen with a gesture.

After a while, IDPA voiced sympathetically, “The injunction to be nice is used to deflect criticism and stifle the legitimate anger of dissent”.

It was one of the famous quotes fetched furtively over the wire while Dave was busy smashing keys. In this case, IDPA was telling him not to be rude.

SPLAYED ACROSS BOTH EARDRUMS in a stream of steep hum was the reminder of an upcoming live code conference, happening at a luxurious resort. Dave was supposed to be attending it but was woefully caught up in urgent work. Earlier he had watched such events remotely without any privation, despite the fact that one had to be physically present at the venue to grab the various sponsored tech goodies for free.

He welted on the notification to start a live stream that swiftly covered the preoccupied screen in ARG, as if a black hole had swallowed a glittering star into oblivion.

The live stream he was gazing at so intently was not rendered on the real computer screen. The virtual computer screen, projected via the ARG he was wearing, had the shape of a glowing 3D rectangle. Glowy as it was, it was not fairy though. It looked almost real, as if it had materialized there, and moreover, others could see it too if they were on the same Line. Further, he could stretch the screen to suit his needs. Sometimes he would transmogrify it into multiple screens for more arduous tasks. Inevitably, he would start the new project today, so a single screen. But wider.

The Augmented Reality Goggle (ARG), a small device in the shape of a cigarette mounted behind each ear, made this possible. It was zip-tied to the top of his left and right auricles and connected by a thin wire from behind, someday to talk to the amygdala and send data via neural signals to enable brain-to-brain communication. High-definition cameras and mics were attached to both the fore and aft ends of the device so that a 360-degree video feed could be viewed or captured.

By the same token, ARGs were of equal stature to human eyes.

Ruffling his hair with his left hand, Dave reposed in the chair as the chattering noise coming from the remote conference grew. Still, plenty of time remained before the keynote would start.

ARG had been upgraded far beyond what it was during its nascent stage. Now you could beam the reality around you for others to experience in real time. Similarly, the conference sponsors had broadcast the whole conference hall in a 360-degree video feed that anybody, and most importantly from anywhere, could tap into. That way, Dave could see everyone who was attending the conference physically, as if he were with them. Even the conference attendees used ARGs during the live conference instead of watching directly with their naked eyes, mostly for the fancy bits that you would not see otherwise.

Strips of imagery scrolled across his line of sight as Dave scanned the conference hall in a coltish manner for some familiar faces. With a mere gesture, you could hop from person to person to face them, as if you were trying to make eye contact to begin a conversation. The only difference here was that the person on the other side would not know of it until you sent a hi-five request.

Dave stiffened for a moment and looked keenly for his best friend, who was physically attending the conference this time. “There he is!”, Dave shouted aimlessly. Before sending him a hi-five request, Dave flipped his camera feed to face himself by tapping on the computer screen and drew a blooming rectangle on it, starting with a pinch in the middle of the screen and then moving both fingers away from each other, which captured him from his face down to his torso like a newspaper cutout. That was, as a matter of fact, the only subset of his untidy reality that would be broadcast when conversing. He flipped the screen back to face his friend and sent him the hi-five. They retreated and discussed technology in the midst of laughter and jokes until the conference began.

Subsequently, Dave shifted gears and colonized the chair and the escritoire in the room with his feet to watch the keynote for a few productive hours.

A CODE EDITOR HOPPED OVER when Dave reclined in the chair with a satisfied feeling after watching his favorite conference. Dave’s job was to find various ways to make IDPA and related AI machinery more astute. On this day, however, he would spend his time building a Page, a.k.a. an ARG application.

The code editor opened all the files he had left unclosed last night. Then a small notification dialog about pending reviews popped up over it. Dave knew he would need complete focus for the new project starting today, but he resisted the urge to cancel the notification so as not to leave his colleagues hanging.

In the past, some people were worried about software eating the world but, lo and behold, software ate the hardware too. Software as a Service was the norm back then, but after a few years some smart folks thought of Hardware as a Service, and it was a game changer. As a result, today, if you need a new machine to run any software, you just have to launch the AHS (Augmented Hardware Services) Page in ARG and choose the configuration you prefer in terms of RAM, graphics card, HD display, storage, and whatnot: an up-and-running workstation in no time, and far cheaper. After this setup, all you need is a high-speed Fiber Line, which is pretty commonplace nowadays. ARG lets you connect to the Line, which in turn allows you to interact with the workstation (and many other things) in Augmented Reality. In other words, the entire operating system and whichever Pages you need at the moment all run on the cloud and are then projected into your field of view.

That was how Dave’s code editor was rendered too.

Dave got through the review without further ado, except at one place which, according to him, needed a personal touch. So he wrote a polite explanation to prove his point, with a mundane graph drawn using arrows (-->), dashes (--), and dots (...), and attached a video recording along with it. In short, he just had an idea for making the program extensible and immune to future requirements (still a dream). Then he moved on to face a new instance of the code editor. Before he began thinking about the new project, IDPA prompted unconventionally in his ears, “Dave, I would like to inform you that you have forgotten to submit the review”. Dave quickly submitted it with embarrassment. With a sense of revenge, Dave patiently teased IDPA,

“What is life?”

“I know you are not interested in a scientifically accurate answer”, rebutted IDPA in disguise after sensing Dave’s intention, “but to me (and you too), it’s a ToDo list!”.

Dave had meant to take revenge but, defeated by the machine instead, he conceded and decided to divert his attention to the job at hand.

WHEN THE USE OF MACHINE LEARNING techniques soared, many programmers gave up on traditional UI/UX development and instead focused on training machines to do it. They had long ago predicted that, if this reached the level of human intelligence, it would save time and money for many. Today, IDPA was still not that proficient when it came to creatively laying out a design on an empty canvas on its own. Instead it relied a lot on existing designs and trends, only to come up with designs that were similar to the rest but a little bit different. It was not imaginatively creative at all, but still, Dave was optimistic about having IDPA on his side today.

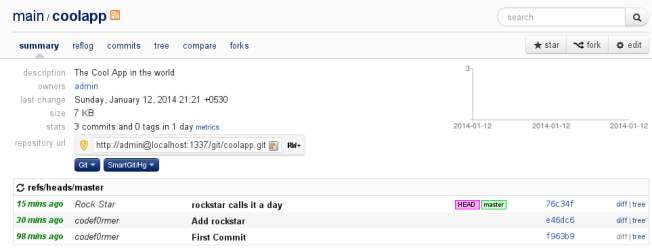

The IDPA built into the code editor was given a simple command to fetch the designs for the new project from the cloud repository. The new project was about a newly launched Flying Commercial Vehicle, which was as compact as a 4-seater electric car but could also fly without the need for big rotor systems. Dave was given the task of creating an ARG Page that had to include various shots of the Vehicle from all angles, and through which people around the world could make bookings from anywhere. When he fed those details to IDPA from within the code editor, it brainstormed in nanoseconds and churned out a design which made Dave sad, not because it was bad but because it looked the same as hundreds of other Pages he had seen before. Most importantly, he did not like the placement of the snapshots and the details provided.

There was one more way, though, especially for those who still had some artistry left in them when it came to designing Pages. He briskly drew boxes on a blank canvas in a circular form, making up the circle of life (as if that was the last thing people needed to complete them), filled some of them with random text, and marked certain areas where the snapshots must be. Then he fed his magnum opus to IDPA, which produced the Page out of it instantly. Along the same lines, he drew a few more things to capture the booking details and had them Page’d too. Meanwhile, IDPA slapped some statistics on the screen; apart from some not-so-important maths, they showed the file size of the compiled Pages versus the original design files. That made Dave sigh in satisfaction, as if humans had contacted an Alien Race they could talk to.

Dave went through the created Pages just to read the information about the vehicle, mentioned next to each angled shot. It looked like he was on fire today, since ideas kept coming to him for making the current Page design even better. Now he could either go back to the drawing board or make edits in the existing Pages himself. He thought for a moment and decided to go with the latter option, that is, to open an Interface Builder. With the flick of a button, the code editor UI literally transformed into the interface builder UI, snapping a bunch of palettes onto each side, only to assist him.

Dave focused on changing the current design the way he intended and also fixed a few design errors that the intelligent interface builder flagged, given the best practices and performance incentives. It was called intelligent for a reason: it had automatically added appropriate validations to the data fields that were supposed to capture the registration details of people who wanted to buy the vehicle. The only thing Dave had to do was connect them to the cloud data storage, which was child’s play. So he picked one of the few available cloud storage engines to save the data and pelted the finish button, which in turn compiled the final ARG application. He then pushed it live on the ARGStore, which was now the one-stop destination for hosting all sorts of ARG applications.

This had become the reality now, since browsers were long dead and the Web, as you may know, had been transfigured beyond recognition.

Dave saw that IDPA had been holding back some news for a long time so as not to disturb him. He unmuted it, only to learn that some researchers had cracked how the brain thinks creatively, and he decided to let go of the thoughts he had been mulling over since the previous night.

If you liked this story in any way, feel free to donate and receive my dilettante painting as a token of appreciation for your donation.